Investors are accustomed to volatility in the semiconductor industry. But recent ups and downs have been especially discombobulating. On October 15th ASML, a supplier of chipmaking gear, reported that orders during its most recent quarter were only half what analysts had expected, causing its shares to plunge. Two days later TSMC, the world’s biggest chip manufacturer, reported record quarterly profits and raised its sales forecast for the year.

Those contrasting signals reflect the diverging fortunes of two kinds of chips. For those needed for artificial intelligence (AI), demand has been “insane”, according to C.C. Wei, TSMC’s boss. For those needed for everything else, it is soggy. The same split is visible in memory chips. On October 7th Samsung, the market leader, issued a public apology for its lacklustre financial performance. On October 24th SK Hynix reported a record profit. It has surged ahead in high-bandwidth memory (HBM), a fast-growing segment of chips needed for AI.

HBM chips have become a vital component in the race to build more powerful and efficient AI models. Running these models requires logic chips that can process oodles of data, but also memory chips that can store and release it quickly. According to SK Hynix, more than nine-tenths of the time an AI model takes to respond to a user query is spent shuttling data back and forth between logic and memory chips. HBM chips are designed to speed this up. They stack memory chips on top of one another and place them right beside the logic chips, which boosts speed and reduces power consumption.

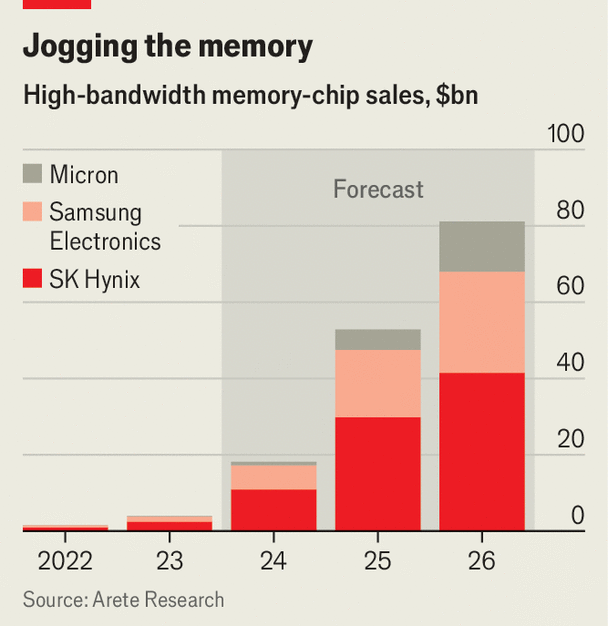

Arete Research, a firm of analysts, estimates that HBM sales will hit $18bn this year, up from $4bn last year, and rise to $81bn by 2026 (see chart). The chips are also highly profitable, with operating margins more than five times those of standard memory chips. SK Hynix, whose share price has more than doubled over the past two years, controls over 60% of the market, and more than 90% for HBM3, the most advanced version. Nam Kim, an analyst at Arete, says the company took an early bet on HBM chips, well before the AI boom. Its leadership has since been cemented by its close ties to TSMC and Nvidia, whose graphics processing units run most of the whizziest AI models.

HBM chips are now emerging as another bottleneck in the development of those models. Both SK Hynix and Micron, an American chipmaker, have already pre-sold most of their HBM production for next year. Both are pouring billions of dollars into expanding capacity, but that will take time. Meanwhile Samsung, which manufactures 35% of the world’s HBM chips, has been plagued by production issues and reportedly plans to cut its output of the chips next year by a tenth.

With a shortage of HBM chips looming, America is pressing South Korea, home to Samsung and SK Hynix, to restrict its exports of them to China. There are rumours that a further round of chip sanctions by America will include some advanced HBM versions. As demand for them rises, so too will interest from governments. ■
